
[VRSK – Verisk] High Quality Cash Flow, Limited Reinvestment Opportunities

When evaluating the competitive advantage of a data-based analytics business, three important questions come to mind: 1) Is the data proprietary? 2) Are the insights from the data critical? and 3) Does the data fuel a product feedback loop? Verisk began its operations in 1971 as Insurance Services Office (ISO), a non-profit enterprise started by P&C insurers to collect industry data and information that its sponsors used to determine premium rates, underwrite risk, develop products, and report to regulators, basically acting as a cost center for the P&C industry. The entity expanded into analytics with its acquisitions of American Insurance Services in 1997 and the National Insurance Crime Bureau in 1998 (which brought expertise in claims fraud detection and prevention) before converting to a for-profit organization in 1999 and going public in 2009 as Verisk (ISO became a wholly owned subsidiary of Verisk). From its ISO days to today, the company has made a long string of acquisitions, bolstering its core insurance risk assessment services and expanding into new industry verticals. Verisk’s decades-long legacy as the central repository of P&C industry data is the heart and soul of its competitive advantage and has served as the foundation on which all its other offerings have been built over the years.

It is difficult to overstate the critical role that Verisk plays in pricing, claims management, and administrative efficiency across the P&C industry. For instance, Verisk sets the de facto industry standard on policy language: its forms, “court-tested” and found in 200mn of the 250mn policies issued in the US, are sold to insurers via subscription and ensure that consumers are getting the same amount of coverage for the same quote across insurers. The company sits at the center of a network that procures data from a wide variety of sources (claims settlements, remote imagery, auto OEMs), analyzes it, and delivers predictive insights to clients (insurers, advertisers, property managers). The agreements through which a customer licenses VRSK’s data also allow the company to make use of that customer’s data. So essentially the customer pays Verisk for a solution that costs almost nothing for the company to deliver, and Verisk gets to use that customer’s data to enhance its own solutions. Those improved solutions in turn reduce churn and attract even more customers (and their data) in a subsidized feedback loop.

This flywheel, built on top of Verisk’s historical advantage as the repository of industry data, has generated the world’s largest claims database (VRSK aggregates claims data from 95% of the P&C industry), containing granular information on 1.1bn claims (up from 700mn claims in 2011), with the insurance ecosystem submitting ~200k new claims a day across all P&C coverage lines. It would be effectively impossible for a competitor to replicate this ever-burgeoning flurry of data. [Aside: VRSK’s spending on public clouds has dramatically escalated over the last few years, allowing the company not only to realize considerable cost savings (without public clouds, it would have been far more costly for Verisk to secure engagements with European retail banks, who operate under strict privacy laws that require data to reside within the country of origin) and flexibility as its datasets continue to expand in girth and complexity, but also to apply machine learning to those datasets and add new functionality to its services].
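To put the scale of that database in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above (1.1bn claims on file, ~200k new claims a day). The 365-day submission year and the idea of a rival capturing the entire industry flow are simplifying assumptions of mine, not anything Verisk discloses.

```python
# Figures quoted in the text above.
claims_in_database = 1.1e9      # claims currently on file
new_claims_per_day = 200_000    # approximate daily submissions

# Assumption: claims arrive every day of the year at the same rate.
annual_intake = new_claims_per_day * 365

# Hypothetical: years a rival would need to match today's database
# even if it somehow captured 100% of the industry's daily flow.
years_to_match = claims_in_database / annual_intake

print(f"Annual intake: {annual_intake / 1e6:.0f}mn claims")
print(f"Years to match at full industry flow: {years_to_match:.1f}")
```

Even under that generous assumption the rival is roughly a decade and a half behind, and the incumbent's database keeps growing in the meantime.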

Comprehensive data paired with a decent model generates better insights than limited data paired with a great model. An insurer that relies solely on in-house claims experience cannot underwrite risks with nearly the same degree of accuracy as one with access to the entire industry’s data. Consider all the vehicle ratings variables – make & model, location where the car is garaged (down to one of 220k census blocks), mileage, driver’s record, semi-autonomous / safety features in the vehicle – that VRSK accounts for in assessing loss costs on 323 ISO series cars (cars that are part of the ISO ratings series used to match premiums to type of car). Or the construction costs – from roofing material to drywall to electrical and HVAC contractor rates – monitored across 460 regions across North America and updated monthly using Verisk’s Xactware software, which insurers use to quantify replacement costs, including labor and material costs, within 21k unit-cost line items in the event of a claim and compare computed insurance-to-value estimates to those submitted by brokers at the beginning of the underwriting process. A contractor who shows up to a damaged home after a storm can leverage the 100mn price points stored in Verisk’s database, estimate a policy claim, and then share that information with the policyholder, adjuster, and the claims department.

Like Verisk’s policy forms, Xactware is an industry standard, used to estimate nearly 90% of all personal property claims in the US. Data is further leveraged to improve efficiency and the front-end experience of insureds. For instance, per one case study, a large auto insurer typically spent 15 minutes walking a policy seeker through its sales funnel (an initial 40-question quote inquiry that transitioned to processing, where 35% of qualified leads had their initial quotes changed, and finally to binding), with lead leakage at each step along the way, ultimately translating into a conversion rate of just ~7%. With Verisk’s LightSpeed, the insurer spent less than a minute on the sales process and doubled its conversions. By sifting through a deluge of 300mn transactions per month pulled from odometer readings, vehicle reports, and claims loss history, Verisk only needs a few pieces of information from the customer upfront to arrive at the right price within seconds at the point of quote. With claims settlements absorbing 2/3 of the $600bn of premiums collected by the US P&C industry every year (not to mention the $6bn-$8bn of fraudulent auto injury claims), and the industry as a whole generating negligible underwriting margins over time, solutions that improve operating efficiency, improve sales outcomes, and accurately estimate the industry’s largest expense item are obviously critical.

Verisk’s products are tightly integrated into customers’ workflows and consumed as subscriptions (subscriptions represent ~85% of the company’s revenue).

1) Is the transformed data proprietary? Check.

2) Are the insights from the data critical? Check.

3) Does the data fuel a feedback loop that deepens the data moat? Check.

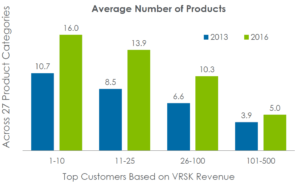

VRSK has a nice moat in the P&C vertical. But of course the best companies not only have dominant competitive advantages around their existing business, but also huge advantaged growth opportunities, and it is here, I’m afraid, that prospects look bleaker. Here is what management sees as its growth opportunities:

Cross-selling: Selling existing products to new customers and introducing new products to existing ones. This is the most compelling opportunity from a probability-of-success standpoint and has motivated many of the recurring tuck-in acquisitions made by the company over the years. Over the last several years, the company has re-oriented how it approaches the customer, moving away from a siloed approach to product sales toward integrated teams: most of the company’s sales to insurers are bundled products that improve customer stickiness while boosting revenue, and sales personnel are compensated on product sales made across both reporting segments (Decision Analytics and Risk Assessment).

New verticals: Repurposing existing IP and capabilities to enter new industry verticals seems reasonable in theory, but has had only so-so results in practice. For instance, in 2004 the company acquired its way into healthcare (reporting systems and analytical tools for health insurers), where it could bring its expertise to bear in a big market beleaguered by hundreds of billions of dollars of annual fraudulent claims. The company also thought that understanding the healthcare market would give it greater insight into workers comp, which constitutes 20% of the P&C market and where rising medical costs constituted a growing portion of claims. Management pushed further into the space with significant healthcare acquisitions in each of 2010, 2011, and 2012, with seemingly robust growth for several years before healthcare revenue suddenly contracted in 2015. Then, in a sudden about-face, the company put Verisk Health up for sale in late 2015, blaming a regulatory and industry structure that made it hard to acquire unique data assets. Another example: in 2005, Verisk acquired its way into the mortgage sector on the premise that personal property data collected in the P&C business could be leveraged to detect fraud in the mortgage lifecycle, only to divest this business in March 2014. In my experience, companies that acquire a bunch of companies only to divest them years later usually leave huge craters in shareholder value. That is not the case here. Verisk Health, sold for $820mn to Veritas Capital, realized a 12% pre-tax IRR during the company’s 12-year ownership period, while the $155mn sale of Interthinx, the mortgage business, to First American implied a 15%+ annual return. While both businesses failed to hit the company’s 20% hurdle rate, they at least met a reasonable cost of capital threshold, and if you were feeling charitable, you might even credit management for explicitly considering return requirements at all.

The takeaway from these stories would seem to be that the company optimizes returns on capital when it reinvests in its core P&C business, which is why Verisk’s $2.8bn cash/stock acquisition in May 2015 of Wood Mackenzie (WoodMac), a subscription-based provider of data analytics and commercial intelligence for the hydrocarbons industry (with 99% customer retention and ~10% organic growth at the time of acquisition), was a head-scratcher to me. WoodMac is a mission-critical application for anyone involved in the oil and gas industry (E&P companies, investors, banks) who needs to stay on top of the supply curve and understand the productivity of various oil projects around the globe.

According to management, there were some immediate cross-sell wins between WoodMac and Verisk Maplecroft (country risk monitoring), but the latter was a really tiny business. Apparently, the bigger, medium-term opportunity is introducing WoodMac’s data assets in the energy sector to Verisk’s P&C insurance customer base, but for the time being this looks more like a standalone data business with an independent growth vector, and over the last year management has acquired several more hydrocarbon data companies to bolt onto WoodMac. After 2 quarters of growth following the acquisition, WoodMac has experienced y/y declines in each of the last 5 quarters on end-market weakness and currency headwinds. So, on the surface, it looks like the company paid an 18x multiple on peak EBITDA (vs. the 9x that it has historically paid for acquisitions) for a good business, but one with questionable synergies.

And then there’s the third vertical, Financial Services, within the Decision Analytics segment, which provides competitive benchmarking, analytics, and measurement of multi-channel marketing campaigns for financial institutions around the world, including 28 of the top 30 credit card issuers in North America. With its consortium-fed depersonalized data sets (which management claims are the most comprehensive in the payments space, with a view into millions of merchants, billions of accounts, and trillions of transactions, including PoS and online transactions, tracked daily), the company offers “enhanced marketing” and risk management solutions to clients.
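For a sense of what the WoodMac multiple cited above means in dollars, here is a rough sketch using only the $2.8bn price and the 18x and 9x multiples quoted in the text. It assumes the 18x applies to the full $2.8bn purchase price (i.e. treats that price as the enterprise value), which is my simplification; the implied EBITDA is derived from that assumption, not a reported figure.

```python
# Figures quoted in the text.
purchase_price = 2.8e9        # WoodMac cash/stock consideration
multiple_paid = 18            # multiple on peak EBITDA
historical_multiple = 9       # what Verisk has typically paid

# Assumption: the 18x multiple is measured against the full $2.8bn.
implied_ebitda = purchase_price / multiple_paid

# What the same EBITDA would have cost at the historical multiple.
price_at_historical = implied_ebitda * historical_multiple
premium_paid = purchase_price - price_at_historical

print(f"Implied peak EBITDA: ${implied_ebitda / 1e6:.0f}mn")
print(f"Price at 9x: ${price_at_historical / 1e9:.1f}bn")
print(f"Premium over historical multiple: ${premium_paid / 1e9:.1f}bn")
```

On these assumptions, about half of the purchase price is a premium over what the company has historically been willing to pay per dollar of EBITDA.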

While the acquisitions of Verisk Health, WoodMac, and to a lesser degree Financial Services do indeed bring proprietary datasets into the company’s fold, they more mundanely resolve the problem that all great but maturing businesses run into, which is what to do with all the cash flow given limited reinvestment opportunities in the core business. To be clear, these acquired verticals seem like “moaty” businesses in their own right, but synergies with the core P&C vertical are murky or non-existent, so it’s unclear to me why these businesses have much more value inside Verisk than as standalone companies, and they certainly weren’t acquired at distressed, opportunistic prices.

Also, given all the big talk around ROIC metrics and the company’s recurring acquisition activity, it’s a bit irksome that management’s comp is tied to pedestrian measures like total revenue growth (at least it’s organic growth) and EBITDA margins. Anyhow, I’ve spent too much time on this topic, as the core Insurance business still constitutes 70% of revenue and even more of total profits, and in any case, if history is any guide, management will dispassionately evaluate these segments against target IRRs. According to management, from 2002 to 2015 the company earned a 19% annualized return on its acquisitions (assuming a 10x exit EBITDA multiple) and a 15% return on share repurchases.

International expansion: The problem with unique data sets is that they’re, well, unique. P&C insurance is a regional market with asset types, regulatory regimes, and demographics that vary widely by country and so the robust dataset that Verisk has spent decades building in the US has little relevance overseas. As even management will admit, the international opportunity is more aptly characterized as “multi-domestic.” Furthermore, for the most part, insurance companies overseas have less sophisticated workflows compared to US insurers, and so the product bundles are slimmer and require a more consultative sales approach (i.e. they cost more to sell).

The quality of the business is adequately represented by VRSK’s profitability: 50% EBITDA margins that, depending on acquisition activity, go up a little or down a little each year, but have certainly expanded over time (from 44% in 2011 and 48% in 2012).

The 70% of revenue coming from the P&C industry should basically reflect economic growth (+lsd), share gain, and cross-selling (+lsd) offset by modest contraction from customer consolidation, so call it +5%-6%. Financial services and energy, the other 30% of revenue, grow at maybe 10%-15%, blending out to something like 8%-10% growth on a consolidated basis, which maybe translates into low double-digit growth after baking in buybacks. At 27x trailing earnings, valuation seems uninspiring relative to other high quality businesses that trade at similar valuations but have far bigger growth opportunities to boot (MA and V come to mind). There are weighty long-term risks to consider as well. For instance, mass adoption of Level 5 (fully autonomous) vehicles will not only reduce the number of vehicles on the road and accidents but re-define value capture within the auto ecosystem. That’s still probably at least a decade out, but on the way, improving advanced driver assistance systems (ADAS) that dramatically reduce accident rates may reset accident curves and impugn the relevance of historical datasets, carving horizontal inroads for competitors with more generic big data and machine learning capabilities who can create new, more relevant datasets from sensor data. (This reduction will happen quicker than some may think because of the salutary knock-on effects that equipping one vehicle with ADAS has on others. I think we’re in a moment now where the rise in distracted driving has outpaced the rate at which these systems have improved, leading to a recent uptick in vehicle accidents, but the trend will resume its southerly course in due time.)
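As a sanity check on the consolidated growth estimate above, here is a minimal Python sketch of the revenue-weighted blend. It uses only the 70/30 revenue split and the segment growth ranges quoted in the text; the function name is illustrative, and it ignores acquisitions, mix shift, and anything else not itemized there.

```python
def blended_growth(weights, growth_rates):
    """Revenue-weighted average of segment growth rates."""
    assert abs(sum(weights) - 1.0) < 1e-9, "weights must sum to 1"
    return sum(w * g for w, g in zip(weights, growth_rates))

# Revenue mix from the text: 70% P&C, 30% financial services + energy.
weights = [0.70, 0.30]

# Low and high ends of the segment growth ranges quoted in the text.
low = blended_growth(weights, [0.05, 0.10])
high = blended_growth(weights, [0.06, 0.15])

print(f"Blended organic growth: {low:.1%} to {high:.1%}")
```

A straight blend of those ranges comes out at roughly 6.5% to 8.7%, so the 8%-10% figure sits at or above the top of what the stated segment rates imply on their own. It may reflect rounding or contributions not broken out here.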